By now, most marketers know they need to integrate some kind of testing into their process for developing new promotions and advertising. There are many types of tests you can perform, but A/B and multivariate testing are the most commonly used.

But what is A/B testing, and what is multivariate testing? Which one should you choose to optimize your web pages? Will the results be affected if you use the wrong test? Both tests can help you make data-driven decisions and increase conversion rates, but there are significant distinctions between these testing methods and their results.

In this post, we answer the question “What is A/B vs. multivariate testing?”, explain the difference between the two, and look at the benefits, limitations, methodology, and common uses of each testing method.

Multivariate & A/B Testing Definitions

The world of A/B testing (split testing) can be confusing, with many people using terms like ‘A/B testing’, ‘A/B/n testing’, and ‘multivariate testing’ interchangeably. However, these are all different types of tests. Here is a quick rundown:

What Is A/B Testing?

Before we dive into the specifics, we need to start with the most obvious question: “What is A/B testing?” An A/B test compares two versions of something, with a single variable changed, to see which one performs better against your conversion goals. The two versions should differ in only one element so that any change in the tested metric can be attributed to that element. This technique can be used to compare two versions of any kind of marketing material, such as emails, app UI, landing pages, or pop-ups.

What Is A/B/n Testing?

‘A/B’ and ‘A/B/n’ testing are very similar. Both test one specific variable change to measure the impact of that change. An A/B test means you are running two versions of the promo: usually a control and a second version with the change you want to test. An A/B/n test means you are running more than two versions of the promo: you are still testing one specific variable, but with several variations of that change. For example:

- A/B Test: You are testing your call-to-action text. One version says ‘Sign Up!’ and the second version says ‘Join Now!’. You could run a similar test on the CTA button size or the page color scheme; for instance, does a blue or a green CTA button perform better on a white background?

- A/B/n Test: You are testing the color of your call-to-action button. One version is red, the second version is blue, the third version is green, and so on.

The key difference is simply the number of variations you are testing of that single element!
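For either kind of test, each visitor has to be assigned to one version and should keep seeing that same version on later visits. Testing tools normally handle this for you; the Python sketch below only illustrates one common approach, deterministic hash-based bucketing. The function name `assign_variant` and the experiment key `cta-color-test` are hypothetical.

```python
import hashlib


def assign_variant(visitor_id: str, variants: list[str], experiment: str = "cta-color-test") -> str:
    """Deterministically map a visitor to one variant (hypothetical helper).

    Hashing the experiment name together with the visitor ID means the same
    visitor always lands in the same bucket, while different experiments
    split traffic independently of each other.
    """
    key = f"{experiment}:{visitor_id}".encode("utf-8")
    bucket = int(hashlib.sha256(key).hexdigest(), 16) % len(variants)
    return variants[bucket]


# A/B test: two versions of the CTA text.
print(assign_variant("visitor-123", ["Sign Up!", "Join Now!"]))

# A/B/n test: several versions of the CTA button color.
print(assign_variant("visitor-123", ["red", "blue", "green"]))
```

The only difference between the A/B and A/B/n calls is the length of the `variants` list. The split is even only on average, so small tests may show slightly unequal group sizes.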

What Is Multivariate Testing?

A multivariate test is any test that measures the impact of multiple variable changes at once. The hint is in the name itself: multi (many) variates (variables). For example, you can test variations of the call-to-action button color and the button text at the same time. This kind of test can help you optimize specific web page elements by revealing which ones have the most effect on engagement. It can also help you find the combination of elements that drives the highest conversion rate for your target audience.
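The number of versions in a multivariate test grows multiplicatively. In a full-factorial design, where every combination is tested, the count is the product of the number of options for each element. The Python sketch below enumerates the combinations for the CTA color and text example; the element names and options are illustrative, and some testing approaches deliberately test only a subset of the combinations.

```python
from itertools import product

# Illustrative elements and options; replace with the elements you want to test.
elements = {
    "cta_color": ["red", "blue"],
    "cta_text": ["Sign Up!", "Join Now!"],
}

# Full-factorial design: one variant per combination of options.
variants = [dict(zip(elements, combo)) for combo in product(*elements.values())]

for number, variant in enumerate(variants, start=1):
    print(number, variant)
# 2 colors x 2 texts = 4 variants
```

Adding a third element with two options (for example, a headline) would double this to 8 variants.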

Why should you run an A/B or Multivariate Test?

To run a successful test, whether A/B or multivariate, you need a clear reason and intent behind it. Optimizing for conversions is a broad reason for running a test, but you need a deeper, more detailed purpose if you want to succeed. Some of the most common reasons for testing are:

- My promotion is underperforming

This is the top reason why most marketers start the testing process.

An underperforming promotion directly affects your revenue, and its performance is something you can measure.

By running split tests, you can adjust your promotion’s details to gradually raise its efficacy. In addition to enhancing your bottom line, this enables you to offer a better customer experience.

- My brand guidelines changed

This happens more often than you might think. Whenever a company goes through a brand refresh, its promotions typically require completely new designs and content.

While A/B testing is a great way to implement these changes quickly, it can also help to guide the brand refresh.

By testing different colors, verbiage, and design styles, either one at a time through an A/B test or several at once through a multivariate test, you can guide the overall brand direction with real-time user preference data, so your updated look is the one customers respond to best.

In this case, you would not test the new designs against the originals.

With a brand refresh, you typically have a general guide for where the brand’s designs and voice are headed, but you still need to work out more granular details such as fonts and color palette. You’re starting over from square one, but your tests should still reflect what you learned from previous successes (or failures).

Pitfalls to Avoid When A/B or Multivariate Testing

Before you begin setting up your tests, keep a few pitfalls in mind.

Pitfall #1: Not checking promotion performance against industry benchmarks

Of course, you want your promotions to outperform the average, but if your promotion is already outperforming industry averages by 20%+, it may be best to test something else in order to reach your goals.

The good news is that your promotion is already well ahead of the competition. The bad news is that running A/B tests on it may do more harm than good: if the promotion already appeals to your customers, introducing variations may cause its performance to drop. Instead, try applying its winning elements elsewhere on your website or in other marketing campaigns, for example, specific product photography styles, or UGC vs. brand-generated images.
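The 20% threshold is a relative comparison: your rate minus the benchmark rate, divided by the benchmark rate. A minimal Python sketch of that arithmetic follows; the rates are made-up examples, not real industry benchmarks, and the function names are hypothetical.

```python
def relative_lift(your_rate: float, benchmark_rate: float) -> float:
    """Return how far your rate sits above (or below) the benchmark, as a fraction."""
    return (your_rate - benchmark_rate) / benchmark_rate


def well_above_benchmark(your_rate: float, benchmark_rate: float, threshold: float = 0.20) -> bool:
    """True when your promotion beats the benchmark by at least `threshold` (20% by default)."""
    return relative_lift(your_rate, benchmark_rate) >= threshold


# Hypothetical example: a 4.0% conversion rate against a 3.0% benchmark.
print(round(relative_lift(0.040, 0.030), 3))  # 0.333
print(well_above_benchmark(0.040, 0.030))     # True
```

Note that 20% above a 3% benchmark means a 3.6% rate, not 23%.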

Pitfall #2: Failing to identify a measurable KPI

If you ‘just want this promotion to do better’ then the chances are you haven’t tied that promotion to a KPI that will impact a bottom-line business goal.

To improve the performance of a promotion, you need to keep an eye on what KPIs will be affected so you can accurately assess a change, either positive or negative.

If the attached KPI is to increase the average order value and you run a test and see increased engagements but a decreased average order value, then the test should not be considered a success.

The most common KPIs attached to promotions are:

- Conversion rate

- Cart abandonment rate

- Email capture rate

- SMS capture rate

- Average order value

You should determine which KPI you are targeting before launching any tests to ensure that you can properly track the results.
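To make the average-order-value example concrete, here is a minimal Python sketch that computes several of these KPIs per variant and judges the test only on the KPI chosen in advance. The class, field names, and numbers are hypothetical, and a real evaluation should also check statistical significance.

```python
from dataclasses import dataclass


@dataclass
class VariantResults:
    """Hypothetical per-variant totals exported from your testing tool."""
    name: str
    visitors: int
    engagements: int  # e.g., clicks on the promotion
    orders: int
    revenue: float

    @property
    def engagement_rate(self) -> float:
        return self.engagements / self.visitors

    @property
    def conversion_rate(self) -> float:
        return self.orders / self.visitors

    @property
    def average_order_value(self) -> float:
        return self.revenue / self.orders if self.orders else 0.0


def is_win(control: VariantResults, variant: VariantResults, target_kpi: str) -> bool:
    """Judge the test on the KPI chosen before launch, not on whichever metric improved."""
    return getattr(variant, target_kpi) > getattr(control, target_kpi)


control = VariantResults("A", visitors=2000, engagements=300, orders=60, revenue=4800.0)
variant = VariantResults("B", visitors=2000, engagements=420, orders=62, revenue=4340.0)

print(is_win(control, variant, "engagement_rate"))      # True: 21% vs. 15%
print(is_win(control, variant, "average_order_value"))  # False: $70 vs. $80
```

Variant B gets more engagement but a lower average order value, so if AOV is the target KPI, the test is not a success. For a KPI where lower is better, such as cart abandonment rate, the comparison would need to be flipped.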

What Should You A/B or Multivariate Test?

Now that you have a better understanding of the basics, we can dig into the most important and most commonly misunderstood aspect of testing – how to structure a reliable A/B or multivariate test.

Elements of a Promotion:

- CTA – The call to action

- Tag Line – Copy to grab visitor attention

- Offer Copy – How your offer is described

- Offer Amount – The tangible value of the offer

- Image Asset – Image used to represent an offer, brand, etc.

- Number of Steps – How many steps it takes a visitor to complete the engagement

These are the primary elements of a promotion and, therefore, your main testing variables.

The offer copy and offer amount are separated because you can change the specific offer amount without actually changing the surrounding copy.

Here’s an example:

- ‘Start today to get 10% off your subscription!’

- ‘Start today to get 15% off your subscription!’

In that example, the ‘Offer Copy’ is the text that your ‘Offer Amount’ is wrapped in. This distinction is important to keep in mind as you move forward into creating actual tests.

The offer amount will almost always carry the most weight. If you think about it in practical terms, you can see why.

For example, would you rather have $5 off a premium car wash or a FREE premium car wash?

All other things being equal, more people will be drawn to the free car wash than to $5 off.

When to run your tests

One of the most important parts of testing implementation is to figure out when it is appropriate to test.

It’s easy to say, ‘Always’. You should always be testing. However, before each test, it’s important to take a step back and ask yourself: How many visitors are going to see this promotion over the next week? Two weeks? A month? The answer is incredibly important. In an ideal world, you will need a few thousand data points (i.e., visitors actually running through your test).

Your test should run until it reaches at least 1,200 impressions for you to be reasonably confident in the results. With this in mind, your first step is to figure out how many visitors will predictably funnel through your test per day. This number should be easy to get from Google Analytics, or from your Justuno dashboard if you have linked your Analytics account.

When looking at your traffic numbers, it’s important to keep in mind the type of promotion you are running and the targeting rules for the promotion.

For example, if you’re running an exit offer promotion, then it’s fair to assume that every visitor to your site will see this. If you’re running a banner promotion that only displays on the homepage, then you should be looking at the visitor data for your homepage only and not for your entire site.

With those general rules in hand, go into Google Analytics, look at the page your promotion will live on, and find the information you need. Go to the Audience tab, set the date range to the last three to six full months, and view the data by week. Each data point on the chart then represents one week of traffic.

Enter each of the data points into a spreadsheet (Google Sheets or Excel will work equally well) and then average those numbers. You now have a reasonably accurate average weekly traffic flow for your site.

Divide that weekly average by seven to get your average visitors per day. With that number, you can calculate the minimum time you’ll need to run your test to reach 1,200 impressions.
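The averaging and duration steps above can be sketched in a few lines of Python. This is a rough calculator built on the article’s rules of thumb (at least 1,200 impressions, at least one full week, and a start and stop on the same weekday); the weekly traffic figures are made up and the function names are hypothetical. It also assumes every visitor to the page sees the promotion, so use the count of visitors who match your targeting rules if that differs.

```python
import math

MIN_IMPRESSIONS = 1_200  # minimum impressions suggested above
MIN_DAYS = 7             # never run a test for less than one full week


def average_daily_visitors(weekly_visitors: list[int]) -> float:
    """Average the weekly data points, then divide by 7 to get visitors per day."""
    return sum(weekly_visitors) / len(weekly_visitors) / 7


def minimum_test_days(daily_visitors: float) -> int:
    """Days needed to reach MIN_IMPRESSIONS, rounded up to whole weeks."""
    days_needed = math.ceil(MIN_IMPRESSIONS / daily_visitors)
    # Rounding up to whole weeks keeps the start and end on the same weekday.
    weeks = math.ceil(max(days_needed, MIN_DAYS) / 7)
    return weeks * 7


# Hypothetical weekly visitor counts for the page your promotion lives on.
weekly = [700, 840, 630, 770]
daily = average_daily_visitors(weekly)
print(daily)                     # 105.0
print(minimum_test_days(daily))  # 14
```

At 50 visitors per day, the same calculation needs 24 days to reach 1,200 impressions and returns 28, which is longer than the two-week guideline for the lowest traffic band in the chart, so very low-traffic pages may need to run past that minimum.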

See our handy chart below for guidelines to get started:

| Visitors per Day | Minimum Length of Test |
| --- | --- |
| 0-100 | 14 days (2 weeks) |
| 101-250 | 14 days (2 weeks) |
| 251-500 | 7 days (1 week) |
| 501-1,000+ | 7 days (1 week) |

The minimum length of time to run a test is one full week, but we usually recommend running it for a full month to get an accurate picture. Your test needs to start and stop on the same day of the week and, ideally, at the same time.

This is because, even if you have 10,000 visitors per day, your site will see different user behavior on different days of the week. Starting and ending your test on the same day of the week helps smooth out unusual customer behaviors and patterns. The same logic applies to running the test for a full month: holidays or other factors can affect behavior and traffic from week to week, causing them to fluctuate. That’s why a full month of testing will deliver a more accurate result (unless, of course, the holiday is part of the test itself!).

A few words of warning:

- Avoid starting a test on a Friday. Should anything go wrong, you may not notice until Monday.

- Start your tests in the morning. For the same reason above, try not to start your tests later in the day.

- Don’t run tests through a holiday, as the fluctuation in traffic and buying patterns will throw off your data and invalidate the test (unless the holiday is an element of the test).
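These scheduling warnings can also be turned into a quick pre-launch check. The Python sketch below flags a Friday or weekend start, an afternoon start, a test length that doesn’t end on the same weekday it started, and any overlap with a holiday list you supply. The function name, the noon cutoff for ‘morning’, and the holiday list are all assumptions to adjust for your business.

```python
from datetime import date, datetime, timedelta


def check_test_window(start: datetime, days: int, holidays: list[date]) -> tuple[datetime, list[str]]:
    """Return the planned end time and a list of scheduling warnings (hypothetical helper)."""
    warnings = []
    if start.weekday() >= 4:  # Monday is 0, so 4-6 are Friday through Sunday
        warnings.append("starts on a Friday or weekend")
    if start.hour >= 12:  # assumption: 'morning' means before noon
        warnings.append("starts after noon")
    if days % 7 != 0:
        warnings.append("does not end on the same weekday it started")

    end = start + timedelta(days=days)
    for holiday in holidays:
        if start.date() <= holiday < end.date():
            warnings.append(f"runs through a holiday on {holiday.isoformat()}")
    return end, warnings


# Hypothetical plan: a two-week test starting Tuesday, November 19, 2024, at 9:00.
end, warnings = check_test_window(
    datetime(2024, 11, 19, 9, 0), days=14, holidays=[date(2024, 11, 28)]
)
print(end)       # 2024-12-03 09:00:00
print(warnings)  # ['runs through a holiday on 2024-11-28']
```

If the holiday is part of what you are testing, simply leave it out of the list.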

Summary of dos and don’ts:

Do:

- Find your per-day traffic

- Use traffic numbers to determine the length of the test

- Start and stop your test on the same day of the week

Don’t:

- Start an A/B test on a Friday or weekend

- Run your A/B test during a holiday

- Start and stop your tests on different days, at different times

Tips for Successful A/B Testing

A/B testing is a methodical process with well-defined objectives. The general pattern of the test remains the same, even though the specifics can change depending on the product or service you are working on. You can use the steps below as an A/B testing guide to make the process easier and more efficient.

Focus on one variable at a time

As you optimize your marketing email campaigns or web pages, you might discover that there are many variables you want to test. However, if you test multiple variables at once, you won’t be able to identify which variable caused the changes in performance. For this reason, you should isolate a single variable and track its performance to determine whether the change you’ve made is effective.

To select your variable, take a look at the components of your marketing resources and the potential alternatives in their style, structure, and phrasing. For instance, if you’re trying to optimize your email campaigns, you might want to test email subject lines, sender names, and various personalization options.

If it makes more sense to test multiple variables rather than a single one, use multivariate testing.

Test on a relevant target audience

In some cases, an A/B test may aim to understand the behaviors of particular audience segments. Perhaps you want to exclude current customers from a paid advertising campaign or you want to target a particular customer persona who would be interested in a new product feature.

This is where target audience segmentation comes in. You can divide your audience by visitor source (organic search, paid ads, etc.), demographics (such as job title, geography, or industry), or behavioral data (such as pages viewed or past purchases). Customer behavior and historical data offer crucial context and show how customers specifically interact with your brand.

Test for long enough

Next, make sure that your test runs for a sufficient amount of time to provide a reliable outcome. How long is long enough? Depending on your business and how the A/B test is carried out, it can take hours, days, or weeks to obtain statistically significant results.

Experts commonly recommend running a test for one week to one month. With a shorter period, there is a greater chance of a false positive, whereas too long a period increases the chance that cookie deletion contaminates your representative sample.
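Running long enough is ultimately about reaching statistical significance. The article doesn’t prescribe a specific method; as one common option, the Python sketch below applies a two-proportion z-test to the conversion counts of two versions. The conversion numbers are hypothetical, and most testing tools run a comparable calculation for you.

```python
import math


def normal_cdf(x: float) -> float:
    """Cumulative distribution function of the standard normal distribution."""
    return 0.5 * (1 + math.erf(x / math.sqrt(2)))


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for the difference in conversion rates of A and B."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        raise ValueError("Both versions have a 0% or 100% conversion rate; the test is undefined.")
    z = (p_b - p_a) / std_err
    p_value = 2 * (1 - normal_cdf(abs(z)))
    return z, p_value


# Hypothetical results: 60 of 1,200 visitors converted on A (5%), 84 of 1,200 on B (7%).
z, p = two_proportion_z_test(60, 1_200, 84, 1_200)
print(f"z = {z:.2f}, p = {p:.3f}")  # z = 2.06, p = 0.039
```

A p-value below 0.05 is a common, if arbitrary, threshold. It does not replace running the test for full weeks: stopping the moment the p-value first dips below the threshold inflates the false-positive rate.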

Analyze your test data

Make sure to integrate your A/B testing software with Google Analytics. Testing tools often only provide top-line data and have limited capacity for data segmentation and slicing.

Keep in mind that averages can be deceiving. If version A outperforms version B by 10%, that single number does not tell the whole story. By sending test data from your testing tools to Google Analytics, you can build detailed segments and custom reports, which can be very helpful for understanding the impact of your test.
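The Python sketch below shows why segments matter: with these hypothetical numbers, version B has the higher overall conversion rate but actually underperforms A on mobile. The row format stands in for whatever segmented export you pull from Google Analytics or your testing tool; it is an assumption, not either tool’s real format.

```python
from collections import defaultdict

# Hypothetical segmented results: (segment, variant, visitors, conversions)
rows = [
    ("desktop", "A", 1_000, 50),
    ("desktop", "B", 1_000, 80),
    ("mobile", "A", 1_000, 40),
    ("mobile", "B", 1_000, 36),
]


def conversion_rates(rows):
    """Conversion rate per (segment, variant), plus an overall ('all') rate per variant."""
    totals = defaultdict(lambda: [0, 0])  # key -> [conversions, visitors]
    for segment, variant, visitors, conversions in rows:
        for key in ((segment, variant), ("all", variant)):
            totals[key][0] += conversions
            totals[key][1] += visitors
    return {key: conv / vis for key, (conv, vis) in totals.items()}


rates = conversion_rates(rows)
for segment in ("all", "desktop", "mobile"):
    print(segment, f"A {rates[(segment, 'A')]:.1%}", f"B {rates[(segment, 'B')]:.1%}")
# all A 4.5% B 5.8%
# desktop A 5.0% B 8.0%
# mobile A 4.0% B 3.6%
```

The segment-level difference would still need its own significance check before you act on it.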

A/B Testing vs. Multivariate Testing

A/B and multivariate testing are very different from one another in a number of respects, even though some of the same principles and technologies are used in both. Here are some of the most important differences:

The number of pages being tested. Multivariate testing can include dozens of different versions of a web page because many possible variable combinations are being tested, whereas an A/B test will only involve two versions of a web page.

Variation combinations. Because there are more combinations of variables, multivariate testing is more complicated than A/B tests. Unlike an A/B test, you are also testing how different variables interact with one another on the page.

Traffic needs. A multivariate test, which can have several page versions, requires more traffic to achieve statistical significance than an A/B test, which has only two page versions.

Significant vs subtle changes. A/B tests typically compare vastly different web pages with significant changes. The differences in a multivariate test are less evident since the variations are typically more subtle.

Local optimum vs global optimum. Multivariate testing is typically used to determine the best version of distinct website elements, whereas A/B tests are used to find the best overall page. This is referred to as the local optimum vs. the global optimum.

How long it takes to see results. Since only two options are compared and they are clearly different, an A/B test will yield results much faster. However, a multivariate test can find the most effective combination of all the elements faster than a series of sequential A/B tests. It just depends on what your end goal is!

Interpreting the results. Because there are fewer and more drastically varied test pages, the findings of A/B tests are typically easier to understand and interpret.
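A quick calculation shows how much the traffic requirement grows. Assuming traffic is split evenly and that each version, rather than the whole test, needs the 1,200 impressions discussed earlier (a stricter reading than the article’s test-level minimum), the Python sketch below compares an A/B test with an eight-version multivariate test, for example three elements with two options each. The daily traffic figure and the function name are hypothetical.

```python
import math


def days_to_reach(impressions_per_variant: int, daily_visitors: float, n_variants: int) -> int:
    """Days until every variant reaches the target, assuming an even traffic split."""
    per_variant_daily = daily_visitors / n_variants
    return math.ceil(impressions_per_variant / per_variant_daily)


daily_visitors = 400  # hypothetical traffic to the tested page

print(days_to_reach(1_200, daily_visitors, n_variants=2))  # A/B test: 6 days
print(days_to_reach(1_200, daily_visitors, n_variants=8))  # 2 x 2 x 2 multivariate test: 24 days
```

Quadrupling the number of versions quadruples the time needed, before any rounding up to full weeks.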

The Bottom Line

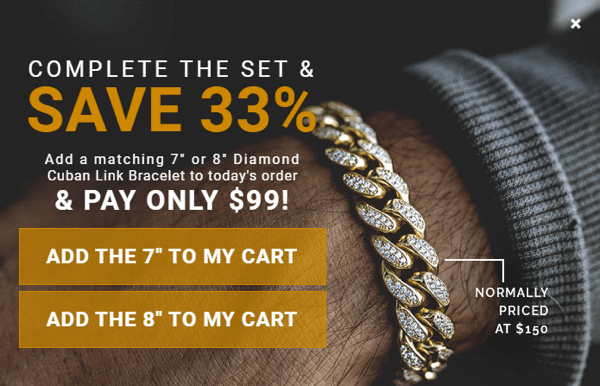

Before you take off and set up your first A/B test in your Justuno account, take a look at a recent case study from Shopify Plus jewelry retailer The GLD Shop. In this A/B test, The GLD Shop saw a 300% increase in conversions!

If you’ve made it through this entire article, you now know the answer to the question “What is A/B testing?”, the difference between A/B and multivariate testing, and why both are important. You have also received a crash course in A/B testing.

Don’t think of A/B testing and multivariate testing as diametrically opposed just because they differ. Consider them two effective optimization techniques that work best when combined: to get the most out of your website, you can choose one or the other, or use both. Want to get started testing your website experience? Try Justuno free for 14 days and set up a multivariate test today!